Supercharge your websites with Gemini AI

Mar 17, 2024


Introduction

With the sudden growth of AI-powered solutions, everyone seems to be looking towards AI. There are innovations like Devin, the "AI software engineer", and there are simple number-guessing apps labeled "built with AI". All of this can feel overwhelming for someone who has just begun their development journey.

However, remember this: calculators did not remove the need to understand arithmetic, and AI tools do not remove the need for fundamentals either. Developers must keep a strong grasp of the basics and keep grinding and learning daily.

What to build?

While web apps are often associated with static content, generative AI opens the door to creative interactivity with only a small amount of code.

There are many ways to use generative AI tools in a web app.

A few examples can be listed:

amongst many others.

What do we need?

We'll be supercharging a web application with generative AI. Here are the tools we'll need for today's project:

Google's Gemini API key: Gemini is Google's long-promised, next-generation family of generative AI models, developed by Google's AI research labs, DeepMind and Google Research.

Next.js by Vercel: Next.js is an open-source, React-based web development framework created by Vercel. It supports server-side rendering and static site generation.

Tailwind CSS: Tailwind CSS is a utility-first CSS framework for rapidly building modern websites without ever leaving your HTML.

Let's build

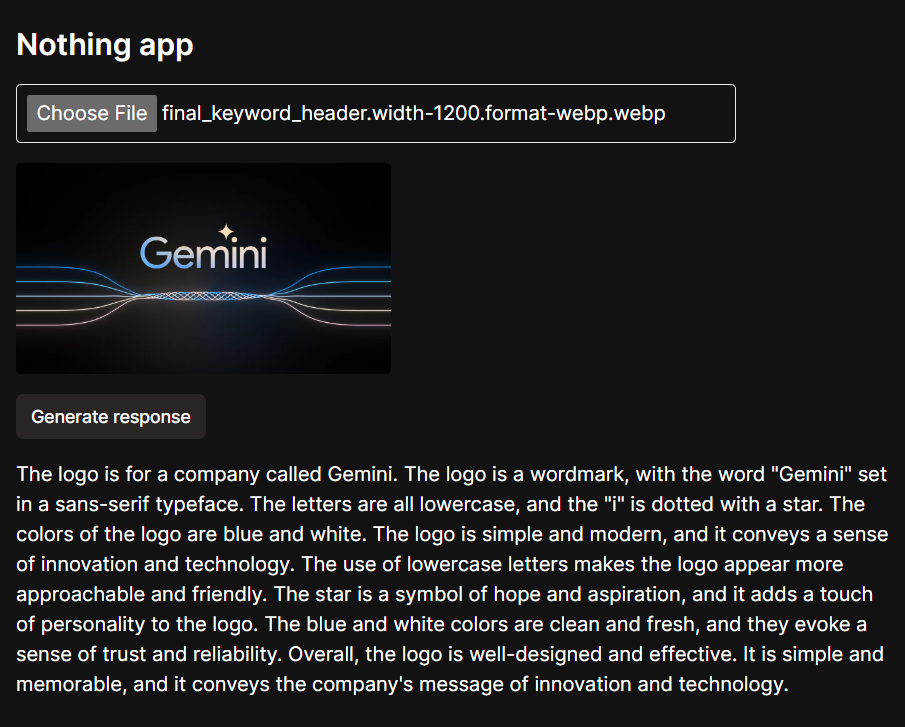

We'll be building an app so generic that it does nothing except share some optimistic remarks about an uploaded image. Let's call it Nothing App, since it does almost nothing.

A working demo is live at https://devgg.in/genai

Preview (before upload):

Preview (post upload):

So let's get started!

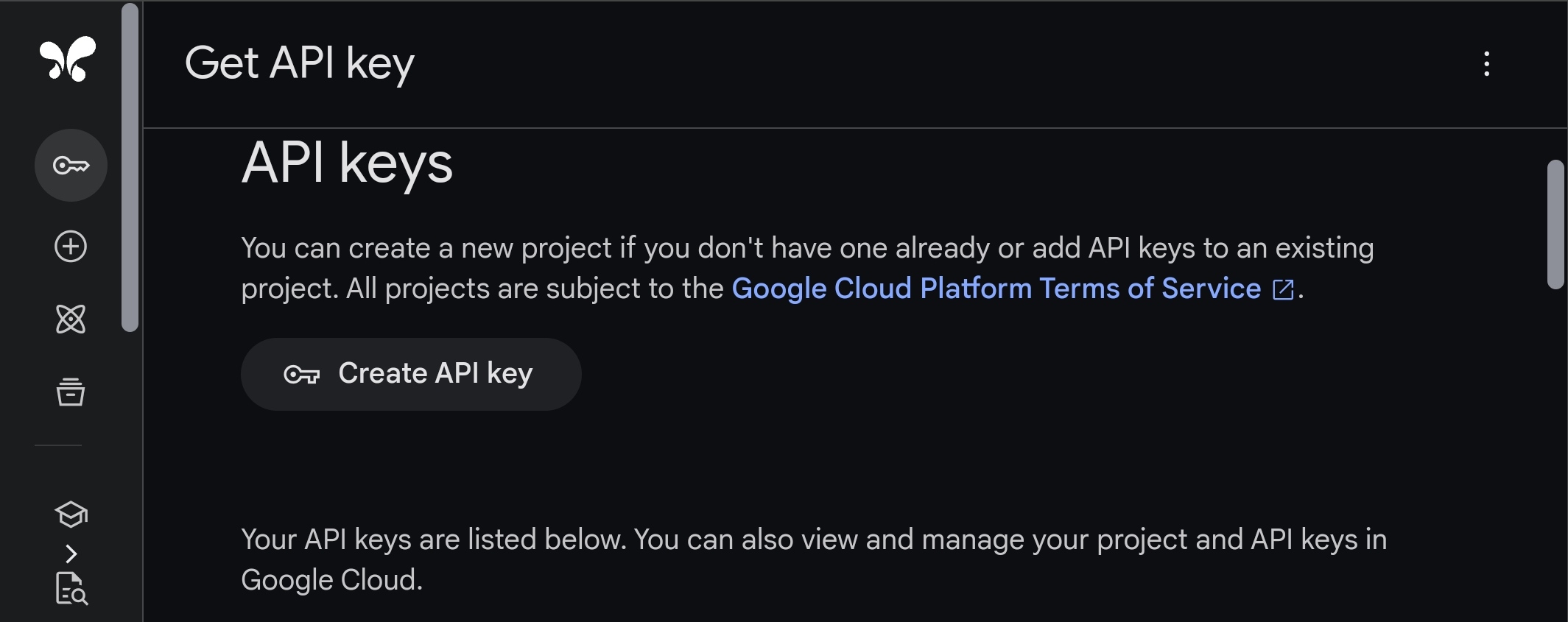

Getting the API keys

To create a generative AI app, we need access to the Gemini API. Head over to https://aistudio.google.com/app/apikey and create a new API key.

Store the API key in a .env file under the name GEMINI_API_KEY. If you choose a different name, update the references in your code to match.
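Next.js loads .env files automatically. Variables without the NEXT_PUBLIC_ prefix are only exposed to server-side code, which is what we want for a secret key. If you'd like the server to fail loudly when the key is missing, here is a minimal sketch of a helper. The name getGeminiApiKey is hypothetical and not part of any library:

```typescript
// Hypothetical helper: read the Gemini key from a server-side environment
// object and fail fast if it is missing or blank.
// Assumes the variable is named GEMINI_API_KEY, as in the .env file.
export function getGeminiApiKey(env: Record<string, string | undefined>): string {
  const key = env.GEMINI_API_KEY?.trim();
  if (!key) {
    throw new Error("GEMINI_API_KEY is not set. Add it to your .env file.");
  }
  return key;
}
```

In the route handler you could then write new GoogleGenerativeAI(getGeminiApiKey(process.env)) instead of interpolating process.env.GEMINI_API_KEY into a template string. The template string approach silently passes the text "undefined" when the variable is missing.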

Building the UI

For the minimal components and the design, I've used Next.js along with Tailwind and a component library called shadcn/ui.

Setting up Next.js

To set up Next.js, run this command in a terminal:

npx create-next-app@latest

When prompted, choose the App Router and TypeScript.

Clear out the boilerplate code and install shadcn/ui.

npx shadcn-ui@latest init

Choose your preferred style and colors, then continue.

We won't need much apart from the button component, so let's add that as well:

npx shadcn-ui@latest add button

Great! Now that we've set up the UI elements, it is time to get started with coding the solution.

Building the component

In the root folder, create a folder named components (if it doesn't already exist), and inside it a file named GenAI.tsx. This file will contain a client component.

For this project, the UI is divided into five sections:

Header

The input element

The image preview

Generate response button

The response section

The initial skeleton of the component looks like this:

"use client";

import { Button } from "@/components/ui/button";
import type { NextPage } from "next";
import { useState } from "react";

// Shape of the JSON returned by our API route
type Remark = {
  result: string;
};

const GenAI: NextPage = () => {
  const [file, setFile] = useState<File | null>(null);
  const [loading, setLoading] = useState(false);
  const [remark, setRemark] = useState<Remark | null>(null);

  return (
    <section className='flex flex-col my-10 gap-y-4 relative'>
      <h2 className='text-2xl font-semibold'>Nothing app</h2>
      <form
        method='POST'
        encType='multipart/form-data'
        className='flex flex-col items-start justify-start gap-4 w-full max-w-xl'
      >
        <input
          type='file'
          name='avatar'
          accept='image/*'
          className='border rounded w-full p-2'
        />
        <Button
          variant={"secondary"}
          size={"sm"}
          disabled={loading || !file}>
          {loading ? "Loading..." : "Generate response"}
        </Button>
      </form>
      <p>Some result goes here.</p>
    </section>
  );
};

export default GenAI;

To build the image preview, we'll use base64 encoding. Let's create a function named toBase64. It takes a file and returns a promise that resolves to the file's contents as a base64 data URL.

// Read a File and resolve with its contents as a base64 data URL
const toBase64 = (file: File): Promise<string> => {
  return new Promise((resolve, reject) => {
    const fileReader = new FileReader();
    fileReader.readAsDataURL(file);
    fileReader.onload = () => {
      resolve(fileReader.result as string);
    };
    fileReader.onerror = (error) => {
      reject(error);
    };
  });
};
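readAsDataURL resolves with a string of the form data:<mimeType>;base64,<data>. You may want to reuse that preview string as Gemini input instead of reading the file a second time. For that case, here is a sketch of a small helper, assuming a standard base64 data URL. The name parseDataUrl is hypothetical. It splits the string into the two fields an inline image part needs:

```typescript
// Hypothetical helper: split a data URL such as "data:image/jpeg;base64,/9j/..."
// into its MIME type and raw base64 payload. Returns null if the string
// is not a base64 data URL.
type ParsedDataUrl = { mimeType: string; data: string };

function parseDataUrl(dataUrl: string): ParsedDataUrl | null {
  // Pattern: data:<mime>;base64,<payload>
  const match = /^data:([^;,]+);base64,(.*)$/.exec(dataUrl);
  if (!match) {
    return null;
  }
  return { mimeType: match[1], data: match[2] };
}
```

The returned object has the same shape as the inlineData object the component sends to the server, so { inlineData: parsed } would be a valid payload when parsing succeeds.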

To preview the file, we need to make a few changes to the GenAI component:

// ... rest of the code

const [base64, setBase64] = useState<string | null>(null);

const onFileChange = (e: React.ChangeEvent<HTMLInputElement>) => {
  if (!e.target.files) {
    return;
  }
  setRemark(() => null);
  setFile(e.target.files[0]);
};

useEffect(() => {
  async function run() {
    // Convert the file to base64
    if (file) {
      toBase64(file as File)
        .then((res) => setBase64(res as string));
    }
  }
  run();
}, [file]);

// ... rest of the code

We also need to set up the event handlers that make the UI work.

Gemini Pro Vision expects inline image data as a base64-encoded string together with its MIME type. So we also need a helper that reads the file into an ArrayBuffer and encodes it as base64:

// When the file is selected, set the file state
const onFileChange = (e: React.ChangeEvent<HTMLInputElement>) => {
  if (!e.target.files) {
    return;
  }
  setRemark(() => null);
  setFile(e.target.files[0]);
};

// On submit, send the image to our API route
const handleSubmit = async (e: React.FormEvent<HTMLFormElement>) => {
  e.preventDefault();
  setRemark(null);
  if (!file) {
    return;
  }
  setLoading(true);
  // Send the encoded image to the server
  const response = await fetch("/api/genai", {
    method: "POST",
    body: JSON.stringify({ image: await generativePart(file) }),
    headers: {
      "Content-Type": "application/json",
    },
  });
  setLoading(false);
  if (!response.ok) {
    setRemark({
      result:
        "Something went wrong. Try checking the file size and make sure you don't upload anything inappropriate. If the issue persists, try a different image.",
    });
    throw new Error(`An error occurred: ${response.status}`);
  } else {
    const data = await response.json();
    setRemark(() => data);
  }
};

/* Gemini Pro Vision accepts inline image data as a base64 string plus a
   MIME type, so read the file as an ArrayBuffer and base64-encode it */
const generativePart = async (file: File) => {
  const f = await file.arrayBuffer();
  const imageBase64 = Buffer.from(f).toString("base64");
  return {
    inlineData: {
      data: imageBase64,
      // Use the file's real type (e.g. image/jpeg), falling back to PNG
      mimeType: file.type || "image/png",
    },
  };
};
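Buffer is a Node.js API. If you'd prefer not to depend on it in client-side code, one alternative is to encode the bytes with the browser's built-in btoa. This technique is swapped in here and is not the approach used above. Here is a minimal sketch, with arrayBufferToBase64 as a hypothetical helper name:

```typescript
// Sketch: base64-encode binary data using only the built-in btoa,
// as an alternative to Buffer.from(...).toString("base64").
function arrayBufferToBase64(buffer: ArrayBufferLike): string {
  const bytes = new Uint8Array(buffer);
  let binary = "";
  // Map each byte to one character with a code point in the 0-255 range
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  // btoa base64-encodes a string whose characters are all in 0-255
  return btoa(binary);
}
```

Inside generativePart, Buffer.from(f).toString("base64") could then become arrayBufferToBase64(f). Building the string byte by byte keeps the sketch simple, but it is not the fastest option for very large files.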

Putting it all together, the complete component looks like this:

"use client";

import { Button } from "@/components/ui/button";
import { Trash2 } from "lucide-react";
import type { NextPage } from "next";
import { useEffect, useState } from "react";

// Shape of the JSON returned by /api/genai
type Remark = {
  result: string;
};

const GenAI: NextPage = () => {
  // State to store the selected file
  const [file, setFile] = useState<File | null>(null);
  const [loading, setLoading] = useState(false);
  // State to store the base64 preview
  const [base64, setBase64] = useState<string | null>(null);
  const [remark, setRemark] = useState<Remark | null>(null);

  // When the file is selected, set the file state
  const onFileChange = (e: React.ChangeEvent<HTMLInputElement>) => {
    if (!e.target.files) {
      return;
    }
    setRemark(() => null);
    setFile(e.target.files[0]);
  };

  // On submit, send the image to the API route
  const handleSubmit = async (e: React.FormEvent<HTMLFormElement>) => {
    e.preventDefault();
    setRemark(null);
    if (!file) {
      return;
    }
    setLoading(true);
    // Send the encoded image to the server
    const response = await fetch("/api/genai", {
      method: "POST",
      body: JSON.stringify({ image: await generativePart(file) }),
      headers: {
        "Content-Type": "application/json",
      },
    });
    setLoading(false);
    if (!response.ok) {
      setRemark({
        result:
          "Something went wrong. Try checking the file size and make sure you don't upload anything inappropriate. If the issue persists, try a different image.",
      });
      throw new Error(`An error occurred: ${response.status}`);
    } else {
      const data = await response.json();
      setRemark(() => data);
    }
  };

  // Regenerate the preview whenever the selected file changes
  useEffect(() => {
    if (file) {
      toBase64(file).then((res) => setBase64(res));
    }
  }, [file]);

  // Clear the selection, the preview, and the previous remark
  const resetFile = () => {
    setRemark(null);
    setFile(null);
    setBase64(null);
  };

  return (
    <section className='flex flex-col my-10 gap-y-4 relative'>
      <h2 className='text-2xl font-semibold'>Nothing app</h2>
      <form
        method='POST'
        encType='multipart/form-data'
        onSubmit={handleSubmit}
        className='flex flex-col items-start justify-start gap-4 w-full max-w-xl'
      >
        <input
          type='file'
          name='avatar'
          accept='image/*'
          className='border rounded w-full p-2'
          onChange={onFileChange}
        />
        {base64 && (
          <div className='group relative' onClick={resetFile}>
            <div className='overlay invisible w-full h-full bg-black/40 border border-red-500 group-hover:visible duration-75 grid place-items-center absolute rounded'>
              <Trash2 className='h-6 w-6 text-red-500' />
            </div>
            <img
              src={base64}
              width={300}
              height={400}
              className='rounded'
              alt='Uploaded Image'
            />
          </div>
        )}
        <Button variant={"secondary"} size={"sm"} disabled={loading || !file}>
          {loading ? "Loading..." : "Generate response"}
        </Button>
      </form>
      <p>{remark?.result}</p>
    </section>
  );
};

// Convert a file to a base64 data URL (used for the preview)
const toBase64 = (file: File): Promise<string> => {
  return new Promise((resolve, reject) => {
    const fileReader = new FileReader();
    fileReader.readAsDataURL(file);
    fileReader.onload = () => {
      resolve(fileReader.result as string);
    };
    fileReader.onerror = (error) => {
      reject(error);
    };
  });
};

// Build the inline image part Gemini expects: base64 data plus MIME type
const generativePart = async (file: File) => {
  const f = await file.arrayBuffer();
  const imageBase64 = Buffer.from(f).toString("base64");
  return {
    inlineData: {
      data: imageBase64,
      mimeType: file.type || "image/png",
    },
  };
};

export default GenAI;
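The component's error message hints at file-size problems, but the component sends whatever file is selected. Below is a minimal sketch of a client-side check you could run before uploading. The validateImageFile helper and the FileLike type are hypothetical. The 4 MB default is an assumption for illustration only, so check Gemini's current request limits before picking a number:

```typescript
// Hypothetical pre-upload check. Returns an error message, or null if the file looks OK.
// FileLike lists only the File properties we read, so a browser File satisfies it.
type FileLike = { size: number; type: string };

function validateImageFile(
  file: FileLike,
  maxBytes: number = 4 * 1024 * 1024, // assumed limit, adjust as needed
): string | null {
  if (!/^image\//.test(file.type)) {
    return "Please choose an image file.";
  }
  if (file.size > maxBytes) {
    const maxMb = (maxBytes / (1024 * 1024)).toFixed(1);
    return `The image is larger than ${maxMb} MB. Try a smaller one.`;
  }
  return null;
}
```

At the top of handleSubmit, after the !file check, you could add const problem = validateImageFile(file); if (problem) { setRemark({ result: problem }); return; }. This skips the request entirely for files that would likely fail.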

Setting up the server

Inside the app directory, create a folder called api, and inside it another folder named genai for our route. The folder structure looks like this:

app
└───api
    └───genai
        └───route.ts

To use Gemini Pro Vision, we need Google's generative AI package for Node.js. Install it with:

yarn add @google/generative-ai

Once it's installed, import it into the route.ts file:

import { GoogleGenerativeAI, HarmBlockThreshold, HarmCategory } from '@google/generative-ai'

Since our application accepts user input, we'll handle it with a POST route handler. Import the Next.js request and response helpers:

import { NextRequest, NextResponse} from 'next/server';

A route handler that just echoes back its input is a handy first step. You can use it to check that the client sends a proper payload.
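Here is a minimal sketch of such an echo handler for app/api/genai/route.ts. It uses the standard Web Request and Response types, which App Router route handlers accept. NextRequest and NextResponse.json(data) work the same way inside a Next.js project:

```typescript
// Echo route handler: parse the JSON body and send it straight back,
// so you can inspect exactly what the client is sending.
export async function POST(req: Request): Promise<Response> {
  const data = await req.json();
  return new Response(JSON.stringify(data), {
    status: 200,
    headers: { "Content-Type": "application/json" },
  });
}
```

Once you've confirmed that the image arrives as { image: { inlineData: { data, mimeType } } }, replace the echo with the Gemini call.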

Next, we need a function that calls Gemini and returns its response.

async function run() {
  const genAI = new GoogleGenerativeAI(`${process.env.GEMINI_API_KEY}`);

  const safetySettings = [
    {
      category: HarmCategory.HARM_CATEGORY_HARASSMENT,
      threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
    },
    {
      category: HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT,
      threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
    },
    {
      category: HarmCategory.HARM_CATEGORY_DANGEROUS_CONTENT,
      threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
    },
    {
      category: HarmCategory.HARM_CATEGORY_HATE_SPEECH,
      threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
    },
  ];

  // For text-and-image input (multimodal), use the gemini-pro-vision model
  const model = genAI.getGenerativeModel({
    model: "gemini-pro-vision",
    safetySettings,
  });

  const prompt = `Given the picture below, you should become creative and be comforting
and nurturing whatever is shown in the picture and make a nice comment about it.
Make sure to have an elaborate explanation in not more than 100 words.
Also do not generate anything that is unsafe or hateful.`;

  // `data` is the parsed JSON request body (defined in the POST handler);
  // data.image is the { inlineData: { data, mimeType } } object sent by the client
  const imageParts = [data.image];

  const result = await model
    .generateContent([prompt, ...imageParts])
    .catch((err) => {
      throw new Error(`An error occurred: ${err}`);
    });

  const response = result.response;
  const text = response.text();
  return text;
}

Let's understand some parts of the function.

Safety settings are optional. They let us control which categories of potentially harmful content get blocked, and at what threshold.

The prompt string should contain your context and instructions. You can also accept part of the prompt from the UI and append it to the context. Adding a few examples on top of that helps you build a solid prompt.

The imageParts array can hold more than one image part. Because it is spread into generateContent, you can send multiple images and run your prompt on all of them.
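The helper below illustrates combining a fixed context with text from the UI and a few examples. The name buildPrompt is hypothetical. The section labels it adds are arbitrary choices, not something Gemini requires:

```typescript
// Hypothetical helper: compose the final prompt from a fixed context,
// optional few-shot examples, and optional text typed by the user.
function buildPrompt(
  context: string,
  userText?: string,
  examples: string[] = [],
): string {
  const sections: string[] = [context.trim()];
  if (examples.length > 0) {
    // List each example on its own line
    sections.push("Examples:\n" + examples.map((e) => `- ${e.trim()}`).join("\n"));
  }
  const extra = userText?.trim();
  if (extra) {
    sections.push(`Additional instructions from the user: ${extra}`);
  }
  // Separate sections with a blank line
  return sections.join("\n\n");
}
```

In the route you might call model.generateContent([buildPrompt(prompt, data.hint), ...imageParts]). Here hint is a hypothetical extra field that the client would send alongside image.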

Finally, integrating the run() function into our route handler gives us something like this:

import { NextRequest, NextResponse } from "next/server";
import {
  GoogleGenerativeAI,
  HarmBlockThreshold,
  HarmCategory,
} from "@google/generative-ai";

export async function POST(req: NextRequest) {
  // Parsed JSON body sent by the client: { image: { inlineData: { data, mimeType } } }
  const data = await req.json();

  async function run() {
    const genAI = new GoogleGenerativeAI(`${process.env.GEMINI_API_KEY}`);

    const safetySettings = [
      {
        category: HarmCategory.HARM_CATEGORY_HARASSMENT,
        threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
      },
      {
        category: HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT,
        threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
      },
      {
        category: HarmCategory.HARM_CATEGORY_DANGEROUS_CONTENT,
        threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
      },
      {
        category: HarmCategory.HARM_CATEGORY_HATE_SPEECH,
        threshold: HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
      },
    ];

    // For text-and-image input (multimodal), use the gemini-pro-vision model
    const model = genAI.getGenerativeModel({
      model: "gemini-pro-vision",
      safetySettings,
    });

    const prompt = `Given the picture below, you should become creative and be comforting
and nurturing whatever is shown in the picture and make a nice comment about it.
Make sure to have an elaborate explanation in not more than 100 words.
Also do not generate anything that is unsafe or hateful.`;

    // data.image is already an inline image part, so pass it through as-is
    const imageParts = [data.image];

    const result = await model
      .generateContent([prompt, ...imageParts])
      .catch((err) => {
        throw new Error(`An error occurred: ${err}`);
      });

    const response = result.response;
    const text = response.text();
    return text;
  }

  const result = await run();
  return NextResponse.json({ result });
}
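The handler passes data.image straight to Gemini. Here is a minimal sketch of a type guard that checks the body has the shape our client sends before any API call is made. Both isImagePayload and InlineImagePart are hypothetical names:

```typescript
// Hypothetical guard for the body the client sends:
// { image: { inlineData: { data: string, mimeType: "image/..." } } }
type InlineImagePart = { inlineData: { data: string; mimeType: string } };

function isImagePayload(body: unknown): body is { image: InlineImagePart } {
  if (typeof body !== "object" || body === null) return false;
  const image = (body as { image?: unknown }).image;
  if (typeof image !== "object" || image === null) return false;
  const inline = (image as { inlineData?: unknown }).inlineData;
  if (typeof inline !== "object" || inline === null) return false;
  const { data, mimeType } = inline as { data?: unknown; mimeType?: unknown };
  // Require non-empty base64 data and an image/* MIME type
  return (
    typeof data === "string" &&
    data.length > 0 &&
    typeof mimeType === "string" &&
    /^image\//.test(mimeType)
  );
}
```

When the check fails, you could return early at the top of POST with return NextResponse.json({ result: "Invalid image payload" }, { status: 400 });. The client's existing !response.ok branch will then show its error message.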

Now that both the server function and the UI are ready, we can test the app and deploy it to a hosting provider.

First, run a local production build to check for build errors:

yarn build

Then start the production server:

yarn start

Congratulations!!

You've successfully created a generative AI application with Next.js and Gemini AI. You can use the gemini-pro model to create text-based applications like a poetry generator or an alternate reality generator, maybe even a text analyzer.